AI models without access to information and tools are really just stochastic parrots. They generate text better and faster with each newly released model, but that's pretty much it. Of course, this is a vast simplification and they are still remarkably capable, but to turn them into something significantly more valuable that is tailored to your specific use case, you have to give them tools.

These tools can be anything; fetching information is just the tip of the iceberg. You can define tools that send emails, create calendar invites, start build pipelines, generate images, transcribe audio, read from a file on your server, write a Slack message, or do anything else you can think of.

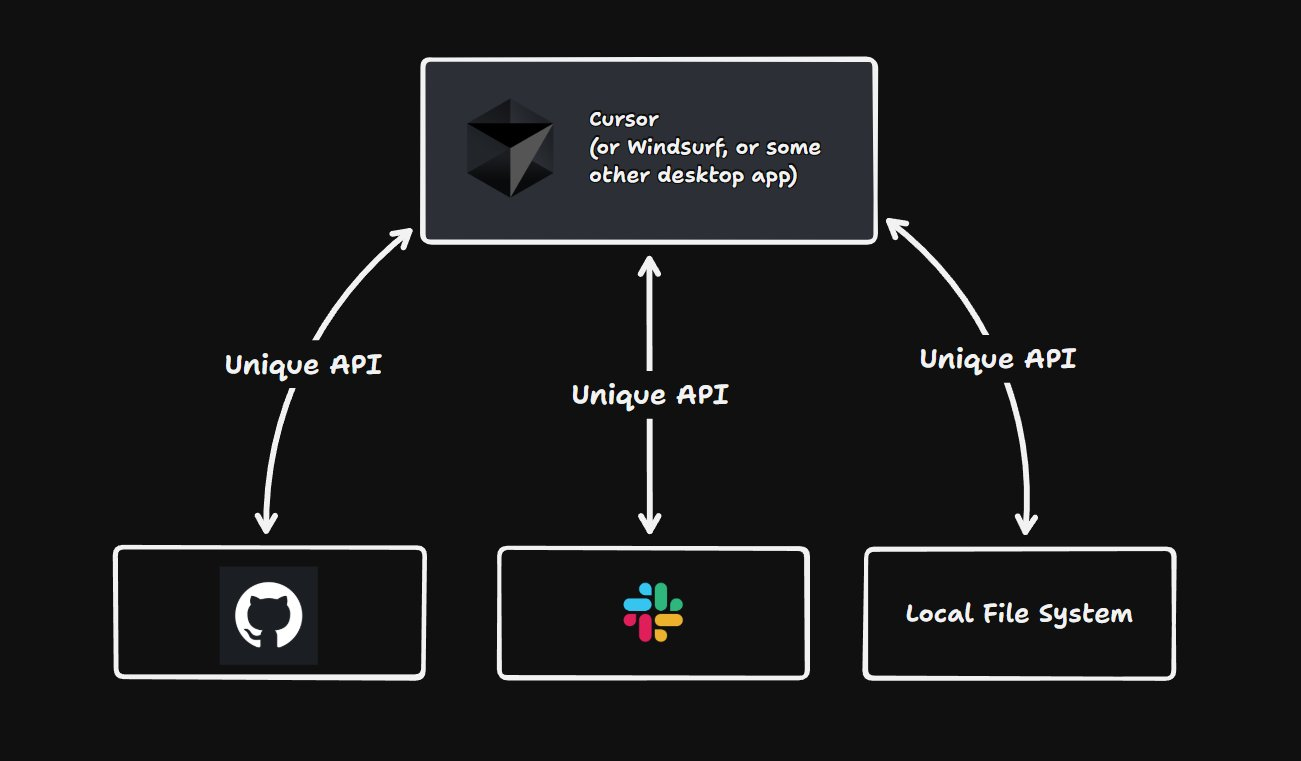

The problem with these? Almost all of them have a different API, and you must integrate them individually into your model. Adding a new tool to your model requires you to write lots of boilerplate code before it can be used.

Image from AIhero. Check out the MCP blog post over there for more information.

This is the problem that MCP servers aim to solve.

Model Context Protocol

Anthropic introduced the Model Context Protocol (or MCP) on November 25, 2024, when they open-sourced it. So what exactly is the Model Context Protocol?

MCP is an open standard that tries to standardize how AI systems can access data sources and tools. Think of it like an ORM, but instead of connecting your code to your database instance or multiple database replicas in a simplified way, it connects your AI system to the tools it needs to operate.

Image generated by Imgflip

The key here is that MCP tries to abstract away all the complexity you would face if you integrated the required APIs manually. It provides a unified API.
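To make "a unified API" a bit more concrete, here is a minimal sketch of the idea in TypeScript. It does not use the MCP SDK at all. The `Tool` interface, the `ToolRegistry` class, and both stubbed tools are hypothetical names I made up for illustration. The only point is that once every tool has the same shape, the calling code no longer cares what sits behind it.

```typescript
// Hypothetical shape shared by every tool (not the real MCP types).
interface Tool {
  name: string;
  description: string;
  run(args: Record<string, unknown>): string;
}

// A registry that treats all tools identically, whatever they do internally.
class ToolRegistry {
  private tools = new Map<string, Tool>();

  register(tool: Tool): void {
    this.tools.set(tool.name, tool);
  }

  list(): string[] {
    return Array.from(this.tools.keys());
  }

  call(name: string, args: Record<string, unknown>): string {
    const tool = this.tools.get(name);
    if (!tool) {
      throw new Error(`Unknown tool: ${name}`);
    }
    return tool.run(args);
  }
}

// Two unrelated integrations (stubbed here) exposed through the same interface.
const registry = new ToolRegistry();
registry.register({
  name: "sendEmail",
  description: "Pretends to send an email",
  run: (args) => `Email sent to ${String(args.to)}`,
});
registry.register({
  name: "createInvite",
  description: "Pretends to create a calendar invite",
  run: (args) => `Invite created: ${String(args.title)}`,
});

console.log(registry.list());
console.log(registry.call("sendEmail", { to: "ada@example.com" }));
```

Adding a third tool is one more `register` call; nothing in the caller changes. MCP aims for the same effect, just across process and network boundaries.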

Okay, but how does it work, you might ask.

How Does MCP Work?

A Model Context Protocol architecture consists of a client (or multiple clients) and a server (or multiple servers). In this case, the client is your AI application, called an MCP client, and the server is what the client communicates with using the Model Context Protocol.
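Under the hood, the client and the server exchange JSON-RPC 2.0 messages. The sketch below builds a `tools/call` request and a matching result by hand, just to show roughly what travels between the two. The helper names `makeToolCallRequest` and `makeToolCallResult` are my own and so is the `getStockPrice` tool name; in practice an SDK builds these messages for you.

```typescript
// JSON-RPC 2.0 envelopes, simplified to the fields this sketch needs.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result: Record<string, unknown>;
}

// Hypothetical helper: what a client sends to invoke a tool.
function makeToolCallRequest(
  id: number,
  name: string,
  args: Record<string, unknown>,
): JsonRpcRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical helper: what a server sends back, reusing the request id
// so the client can match the answer to the question.
function makeToolCallResult(id: number, text: string): JsonRpcResponse {
  return { jsonrpc: "2.0", id, result: { content: [{ type: "text", text }] } };
}

const request = makeToolCallRequest(1, "getStockPrice", { ticker: "AAPL" });
const response = makeToolCallResult(request.id, "The price of AAPL is $182.47 currently.");

console.log(JSON.stringify(request, null, 2));
console.log(JSON.stringify(response, null, 2));
```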

The server can be hosted by a company that wants to make its API available through the standard so that developers will start building AI apps on top of it, or you can self-host it.

If you self-host MCP servers, you also have multiple options. One option is to build an MCP server yourself using the Python or TypeScript SDK, or any of the other SDKs available in the repository. In that case, however, you have simply moved development to a different layer. Although your AI application can communicate with your MCP server in a unified way, you still have to develop the MCP server itself.

However, you have another option. There are multiple prebuilt MCP servers out there that you can spin up in seconds. You only need Docker. The caveat in this case is that these are individual MCP servers for each API. Their output is unified, but you need multiple of them if you'd like to integrate various services.

Here is a comparison.

Manually developing an MCP server using one of the SDKs

Pros

- You can implement multiple providers into your server

- You are probably good with a single MCP server

Cons

- You have to implement the logic yourself

Using a prebuilt MCP server

Pros

- You can spin it up in seconds

- No coding required

Cons

- You will probably need multiple MCP servers

How to Speak to Multiple MCP Servers?

I will be honest: I told you a white lie when I mentioned above that the architecture is as simple as a client and a server. But I promise I only did it to help you understand the concept as fast as possible.

In reality, the AI application you are building is called the host. It can spin up multiple MCP clients, each connected to exactly one MCP server. Servers are isolated and cannot communicate with each other, and your AI application, the host, manages the clients.
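Here is a rough sketch of that host, client, and server relationship. It runs without the SDK: `ToolClient`, `makeFakeClient`, and `Host` are hypothetical names, and the fake clients stand in for real client and server pairs. Treat it as an illustration of the routing idea, not as how any particular host is implemented.

```typescript
// Hypothetical minimal view of a client: one client talks to exactly one server.
interface ToolClient {
  serverName: string;
  callTool(name: string, args: Record<string, unknown>): Promise<string>;
}

type ToolFn = (args: Record<string, unknown>) => string;

// Stand-in for a real client/server pair so the sketch runs on its own.
function makeFakeClient(serverName: string, tools: Record<string, ToolFn>): ToolClient {
  return {
    serverName,
    async callTool(name, args) {
      const tool = tools[name];
      if (!tool) {
        throw new Error(`${serverName} has no tool named ${name}`);
      }
      return tool(args);
    },
  };
}

// The host owns the clients and routes each request to the right one.
class Host {
  private clients = new Map<string, ToolClient>();

  addClient(client: ToolClient): void {
    this.clients.set(client.serverName, client);
  }

  async callTool(
    serverName: string,
    toolName: string,
    args: Record<string, unknown>,
  ): Promise<string> {
    const client = this.clients.get(serverName);
    if (!client) {
      throw new Error(`No client connected to ${serverName}`);
    }
    return client.callTool(toolName, args);
  }
}

const host = new Host();
host.addClient(
  makeFakeClient("stocks", { getStockPrice: (a) => `Price requested for ${String(a.ticker)}` }),
);
host.addClient(makeFakeClient("chat", { postMessage: (a) => `Posted: ${String(a.text)}` }));

host.callTool("stocks", "getStockPrice", { ticker: "AAPL" }).then((text) => console.log(text));
```

Note that asking the `stocks` client for `postMessage` fails: each client only sees the tools of its own server, which mirrors the isolation described above.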

Why Doesn't The Host Directly Connect to The Server?

Good question! And the answer is pretty straightforward. Clients are responsible for managing the connections to the MCP server, including the state, protocol negotiation, protocol messages, security boundaries, and more.

This way, hosts only have to care about the results.
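As one example of what "protocol negotiation" means, the client and server agree on a protocol version when they first connect. The sketch below is my simplified reading of that step: the client asks for the newest version it supports, the server echoes it if it can or answers with its own newest version, and the client gives up if it cannot speak what the server offered. The function names are hypothetical, and the lists are assumed to be non-empty and sorted from oldest to newest.

```typescript
// Server side: echo the requested version if supported,
// otherwise answer with the newest version the server knows.
function chooseServerVersion(requested: string, serverSupported: string[]): string {
  if (serverSupported.indexOf(requested) !== -1) {
    return requested;
  }
  return serverSupported[serverSupported.length - 1];
}

// Client side: accept the server's answer only if the client supports it too.
function clientAccepts(serverVersion: string, clientSupported: string[]): boolean {
  return clientSupported.indexOf(serverVersion) !== -1;
}

// Hypothetical wrapper that plays both roles; returns null when the
// two sides have no version in common and the client should disconnect.
function negotiate(clientSupported: string[], serverSupported: string[]): string | null {
  const requested = clientSupported[clientSupported.length - 1];
  const answer = chooseServerVersion(requested, serverSupported);
  return clientAccepts(answer, clientSupported) ? answer : null;
}

// Newer client, older server: they fall back to the shared version.
console.log(negotiate(["2024-11-05", "2025-03-26"], ["2024-11-05"]));
// No overlap at all: the client should disconnect.
console.log(negotiate(["2025-03-26"], ["2024-11-05"]));
```

The host never sees any of this. It asks a client for a result and gets one, or gets an error if the connection could not be established.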

Other Benefits of MCP

Another good thing about MCP architecture is that concerns are separated. Your API key for a specific service won't be exposed to the client because the server handles it. And that is true for each of the MCP servers you are using.
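A small sketch of what that separation can look like on the server side. Everything here is an assumption for illustration: `makeStockPriceHandler`, the `QuoteFetcher` type, and the stubbed upstream call are hypothetical, and a real server would typically read the key from an environment variable and make an HTTP request instead.

```typescript
type ToolResult = { content: { type: "text"; text: string }[] };

// Hypothetical upstream call; a real server would issue an HTTP request here.
type QuoteFetcher = (ticker: string, apiKey: string) => Promise<number>;

// The key is captured on the server when the handler is created
// (for example from an environment variable). It is only ever passed
// upstream and never appears in the result sent back to the client.
function makeStockPriceHandler(apiKey: string, fetchQuote: QuoteFetcher) {
  return async ({ ticker }: { ticker: string }): Promise<ToolResult> => {
    const price = await fetchQuote(ticker, apiKey);
    return {
      content: [
        { type: "text", text: `The price of ${ticker} is $${price.toFixed(2)} currently.` },
      ],
    };
  };
}

// Stub that stands in for a real stock market API and checks the key.
const fakeFetchQuote: QuoteFetcher = async (_ticker, apiKey) => {
  if (apiKey !== "secret-key") {
    throw new Error("unauthorized");
  }
  return 182.47;
};

const handler = makeStockPriceHandler("secret-key", fakeFetchQuote);
handler({ ticker: "AAPL" }).then((result) => console.log(JSON.stringify(result)));
```

The client only ever receives the `content` array, so rotating or revoking the key is purely a server-side change.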

You only have to care about the connection to the server. And this is the point where I think the concept lacks something: authentication and authorization.

You are free to implement a custom solution between your client and server, but as of the time of this post, the protocol does not specify a way to do it natively.

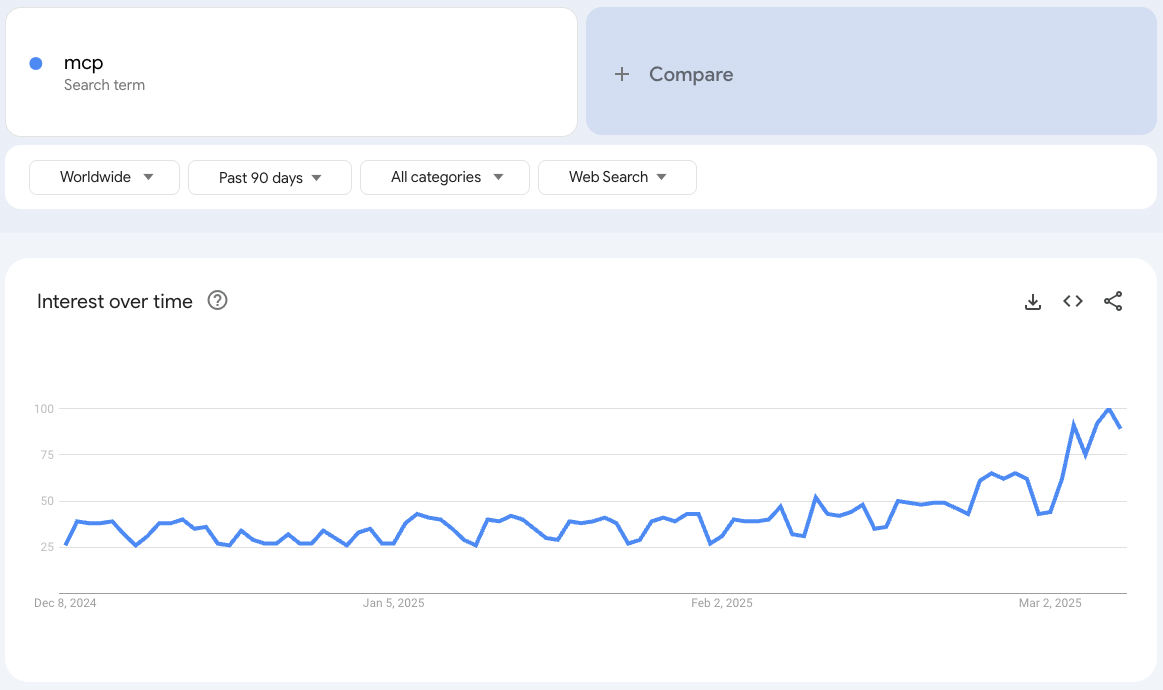

Why Is Everyone Talking About It?

Another excellent question. As I mentioned, the official release was at the end of 2024. So why did the topic take off now? One reason could be that Anthropic recently announced an official MCP registry API at an @aiDotEngineer event. Here is the recording of the workshop if you are interested in more.

Introducing this API means it will be much easier to discover official MCP servers now.

Another simple answer could be that adoption reached a certain point recently. However, my bet is on the previous point.

The Future of MCP

I just recently learned about the concept, but it already looks promising. Honestly, it looked like a solution looking for a problem at first sight, but as I learned more and more about it, I started to see the benefits.

Since it is a relatively new standard without too many alternatives (are there any?), it has the potential to become widespread, similar to how OpenAI's API became the de facto standard to serve model APIs.

Also, the more services offer their own MCP implementation, the easier it will be for builders to integrate them. We already have countless examples on GitHub. Who will be next?

I will keep an eye on the topic and return with a follow-up post later if it isn't just a passing fad.

You can find some simple implementation examples at the end of this post to see how you can spin up MCP in minutes locally.

Examples

MCP Server

Here is a dead-simple MCP server, written in TypeScript, that returns the price of a particular stock.

    import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
    import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
    import { z } from "zod";

    // Create the server and give it a name and a version.
    const server = new McpServer({
      name: "Stock Market Server",
      version: "1.0.0"
    });

    // Register a tool that takes a ticker symbol and returns a text result.
    server.tool("getStockPrice",
      { ticker: z.string() },
      async ({ ticker }) => ({
        content: [
          {
            type: "text",
            text: `The price of ${ticker} is $182.47 currently.`,
          },
        ],
      }),
    );

    // Communicate over stdin/stdout so a client can launch this file as a subprocess.
    const transport = new StdioServerTransport();
    await server.connect(transport);

This is just an example. Naturally, in a real implementation, that async function would call a stock market API instead of returning a hardcoded price.

Note that in some cases, this server does not have to run constantly in the background. If you are using an app that supports MCP out of the box, it can spin up this server whenever needed, retrieve information or execute a task, and then shut it down again. This is precisely what Claude Code does if you configure it with the MCP server above on your local machine.

MCP Client

Here is a client that can spin up the server and use its tools when needed.

    import { Client } from "@modelcontextprotocol/sdk/client/index.js";
    import { StdioClientTransport } from "@modelcontextprotocol/sdk/client/stdio.js";

    // Tell the transport how to launch the server as a subprocess.
    const transport = new StdioClientTransport({
      command: "node",
      args: ["server.js"]
    });

    const client = new Client(
      {
        name: "Stock Market Client",
        version: "1.0.0"
      },
      {
        capabilities: {
          prompts: {},
          resources: {},
          tools: {}
        }
      }
    );

    // Starts the server process and performs the protocol handshake.
    await client.connect(transport);

    // Call the tool registered by the server above.
    const result = await client.callTool({
      name: "getStockPrice",
      arguments: {
        ticker: "AAPL"
      }
    });

    console.log(result);

Running this client will print the following response:

    {
      content: [
        {
          type: "text",
          text: "The price of AAPL is $182.47 currently.",
        }
      ],
    }

Note that you don't have to manually start the MCP server to communicate with it. The client will start running it with stdio. You just have to tell the client how it can start the server, and it will handle the process automatically.

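Running the above example is as simple as executing node client.js, with server.js in the same directory. Both files use import statements and top-level await, so they need to run as ES modules (for example, with "type": "module" in your package.json).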
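If you are curious what "with stdio" means in practice: the client writes JSON-RPC messages to the server process's standard input and reads replies from its standard output, one message per line. The sketch below shows that newline-delimited framing in isolation. `encodeMessage` and `LineBuffer` are hypothetical names for this post, not the SDK's internals, and the sketch leaves out the process spawning itself.

```typescript
// Serialize one message as a single line. JSON.stringify escapes newlines
// inside strings, so the only raw newline is the terminator.
function encodeMessage(message: unknown): string {
  return JSON.stringify(message) + "\n";
}

// Accumulates raw chunks from a stream and returns every complete message.
class LineBuffer {
  private buffer = "";

  push(chunk: string): unknown[] {
    this.buffer += chunk;
    const messages: unknown[] = [];
    let newline = this.buffer.indexOf("\n");
    while (newline !== -1) {
      const line = this.buffer.slice(0, newline);
      this.buffer = this.buffer.slice(newline + 1);
      if (line.trim() !== "") {
        messages.push(JSON.parse(line));
      }
      newline = this.buffer.indexOf("\n");
    }
    return messages;
  }
}

const wire = encodeMessage({ jsonrpc: "2.0", id: 1, method: "tools/list" });
const reader = new LineBuffer();

// A stream can split a message anywhere; nothing is emitted
// until the terminating newline has arrived.
const first = reader.push(wire.slice(0, 10));
const second = reader.push(wire.slice(10));

console.log(first.length, second.length); // prints: 0 1
```

This is also why a stdio server should not print anything else to standard output: stray log lines would end up in the same stream the client is trying to parse.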