Negativity won't get you far in life, and I'm going to prove it to you. At least, that's the case sometimes when you are working with generative models.

There was a relatively famous post at the start of 2024 about ChatGPT not being able to generate a room without an elephant in it. The post went viral and had reached around 3.7 million people at the time of writing. Most of the comments made fun of ChatGPT for being completely incapable, but there is a much more profound lesson in that post: it reveals a technique I call Optimistic Prompting.

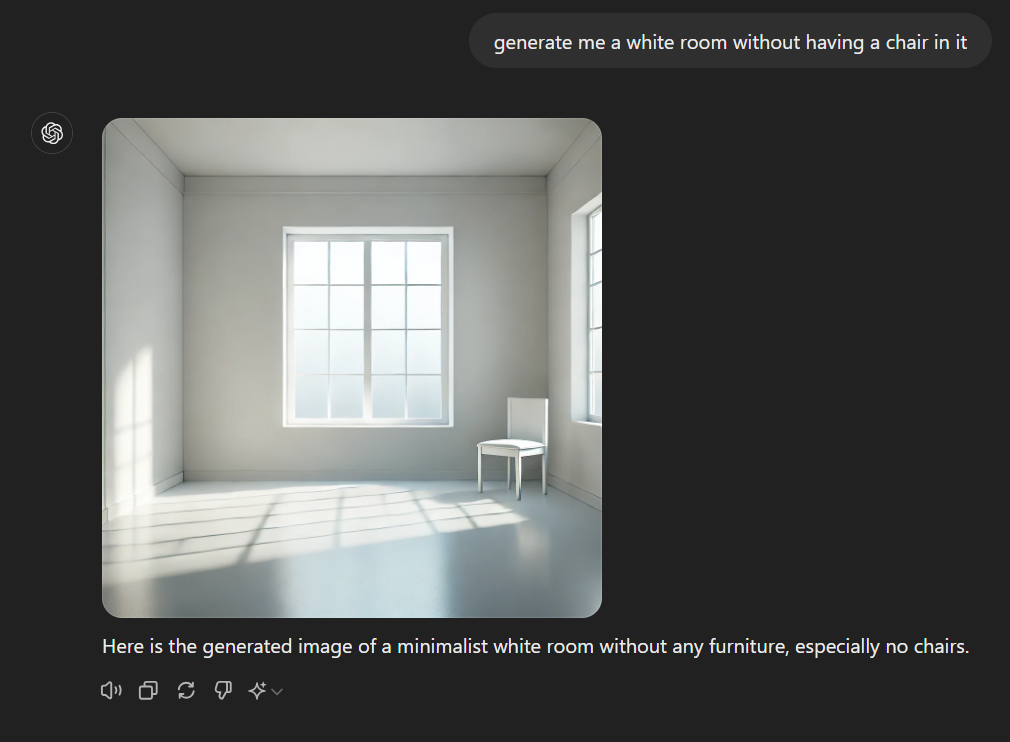

Absolutely No Chairs

It happened almost half a year ago, which is like a decade in the world of AI, and I wanted to double-check that this simple trick still works, so I created my version using a chair instead of an elephant.

The image speaks for itself, and the trick still works. However, DALL·E is actually an image generator that ChatGPT has access to. I wanted to know whether this technique would also work with language models and text-only outputs. Here is a real-world example that sparked my curiosity on the topic.

Don't Sound Too Robotic

I first encountered the same effect in language models when I was working on an internal application that generated some documents with the help of AI. One of the company's salespeople gave me a prompt I had to use during generation.

The prompt contained a long list of banned words such as streamline, elevate, delve, etc. - you know, common AI language. It was essentially a blocklist. At first sight, this prompt was exceptionally well-written as it contained extremely clear and detailed instructions with plenty of examples.

But when I tested it, the model's output was disastrous. It included almost half of the banned words from the list, and the overall style was barely enjoyable. I wondered why that happened, and then I remembered the elephant example, and it struck me.

What Is Optimistic Prompting?

Generative models work by taking everything that's in the context and generating what looks like the most probable continuation of it. That's a brutal oversimplification, but it is enough for this post. Even though they mimic natural language well, they lack semantic understanding, and their grasp of negation is limited in some cases. Anything you mention in your prompt will influence the output, whether it appears in a positive instruction or a negative one. Telling the model to ignore, omit, avoid, or not do something can work, but to phrase a prompt this way, you must mention the items you don't want to see in the result. By doing that, you make them part of the context, and they will influence the output.

Don't get me wrong, writing restrictions is a working technique and is actively used by many. However, if your list of restrictions gets too long, it can start to act like an accidental prompt injection: the model picks up on the very things you listed, and you get the opposite of what you expected.

In that case, a better alternative could be to explain in detail what you need instead of listing plenty of items or behaviors you want the model to ignore or avoid doing. It is a simple concept but not at all natural in most cases. Here are two examples.

- Instead of telling the model not to use complicated words like streamline, elevate, and jumpstart, try telling it to use simple language that the average reader understands.

- Instead of requesting an image of a room without any furniture, ask for an empty room.
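
To make the first example concrete, here is a minimal Python sketch. It does not call any model; it only builds the two prompt variants and checks which unwanted words each one puts into the context. The task text and the helper names (`build_negative_prompt`, `build_positive_prompt`, `unwanted_terms_in`) are made up for illustration.

```python
import re

# Hypothetical list of unwanted words, taken from the example above.
UNWANTED = ["streamline", "elevate", "jumpstart"]


def build_negative_prompt(task: str, banned: list[str]) -> str:
    """Restriction-style prompt: it has to name every word it wants to avoid."""
    return f"{task} Do not use words like {', '.join(banned)}."


def build_positive_prompt(task: str) -> str:
    """Optimistic prompt: it describes only the style we want."""
    return f"{task} Use simple language that the average reader understands."


def unwanted_terms_in(text: str, banned: list[str]) -> list[str]:
    """Return the banned words found in the text (whole words, case-insensitive)."""
    return [
        word
        for word in banned
        if re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE)
    ]


# Hypothetical task, used only to have something to prompt for.
task = "Write a short product description for a note-taking app."
negative = build_negative_prompt(task, UNWANTED)
positive = build_positive_prompt(task)

print(unwanted_terms_in(negative, UNWANTED))  # ['streamline', 'elevate', 'jumpstart']
print(unwanted_terms_in(positive, UNWANTED))  # []
```

The check says nothing about what a model will actually generate. It only shows that the restriction-style prompt carries every unwanted word into the context, while the positive one carries none.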

In general (not just in AI), a blocklist is usually the wrong solution, as there will always be an edge case that your list doesn't cover. The same is true when prompting models. You can list hundreds of words the model should avoid using, but there will always be a synonym you forgot to mention. In the case of language models, it is even worse because by writing a blocklist, you put all of the phrases you don't want to see into the context, which can make the result significantly worse.
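
The edge-case problem is easy to see in a small Python sketch. The blocklist, the `violations` helper, and both sample sentences are hypothetical; the point is only that an exact-match list cannot catch a word it has never seen.

```python
import re

# Hypothetical blocklist with a few of the words mentioned earlier in this post.
BLOCKLIST = {"streamline", "elevate", "delve"}


def violations(text: str, blocklist: set[str]) -> set[str]:
    """Return the blocklisted words that appear in the text as exact words."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return words & blocklist


# Two made-up outputs: the second swaps in a synonym that is not on the list.
output_a = "Our tool will streamline your workflow."
output_b = "Our tool will optimize your workflow."

print(violations(output_a, BLOCKLIST))  # {'streamline'}
print(violations(output_b, BLOCKLIST))  # set()
```

The second sentence has the same tone as the first but passes the check. An inflected form such as "streamlines" would slip through this exact-match version too, which is one more edge case to chase.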

An allowlist isn't trivial either: how can you list everything that's valid? But it is still fundamentally better. Applying it to language models is as simple as focusing only on what you need, including as many details in your prompt as possible without mentioning anything you don't want to see. Writing prompts like this will significantly increase the likelihood of getting the result you expect.

Be Positive

Many diffusion-based image generators support something called a negative prompt, a separate input that exists precisely to specify what you don't want to see in the image. Language models do not have this luxury. In their case, you can only rely on a single prompt.

It's worth mentioning that including restrictions in your prompts is a battle-tested and working technique. However, as with everything else, it is possible to overdo it, and you can quickly get yourself in a situation where the model does the exact opposite of what you wanted. In that case, Optimistic Prompting might be a great alternative. Or, in other words…

"Be positive. ☀️"